So Norbert Wiener's Cybernetics gets a full-page review in the 27 December issue of Time. I'm a bit worried that it'll hit Newsweek in mid-January and we'll miss it, and there's an important point to be made. With President Obama recently in the news warning brain workers that they'll all be out of a job real soon now due to artificial intelligence and stuff like that, I don't want to miss the moment!

"Engines and production machines replace human minds; control machines replace human brains. A thermostat thinks, after a fashion. It acts like a man who decides that the room is too cold and puts more coal on the stove. Modern control mechanisms are much better than that. Gathering information from delicate instruments (strain gauges, voltmeters, [illegible] tubes) they act up --and more quickly and accurately than human beings can. They never get sick or drunk or tired. If such mechanisms are properly designed, they make no mistakes.

When combined in tightly cooperating systems, such machines can run a whole manufacturing process, doing the directing as well as the acting, and leave nothing for human operatives to do. Technologically (if not politically), whole automatic factories are just around the corner. Squads of engineers are excitedly designing mechanisms for them."

. . . [Modern calculators are] "built chiefly of electron tubes which give a simple 'yes' or 'no' answer when stimulated by means of electrical impulses. This is roughly what the neurons (nerve cells) of the human brain do . . . The modern industrial revolution is . . . bound to devalue the human brain at least in its simpler and routine decisions . . . The human being of mediocre attainments or less [will have] nothing to sell that is worth anyone's money to buy."

The binary "yes" or "no" bit suggests an awareness of digital computing, but in another part of the story, Wiener compares insanity in human brains to instability in machines.
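The thermostat in the Time quote is the textbook case of feedback control built on a yes-or-no decision. Here is a minimal sketch of that "room too cold, add coal" loop, assuming a crude heat-loss model. The function name `simulate_thermostat`, the setpoint, the hysteresis band and the heating and loss rates are all hypothetical illustration, not anything from Wiener or Time.

```python
def simulate_thermostat(start_temp, setpoint, band, heat_rate, loss_rate, outside, steps):
    """Simulate on/off (bang-bang) thermostat control with a hysteresis band.

    The heater switches on below setpoint - band and off above setpoint + band;
    in between it keeps its previous state. Heat loss each step is proportional
    to the indoor/outdoor temperature difference (Newton's law of cooling).
    Returns a list of (temperature, heater_on) pairs, one per time step.
    """
    temp = start_temp
    heater_on = False
    history = []
    for _ in range(steps):
        # The "yes or no" decision: compare the measurement to the setpoint.
        if temp < setpoint - band:
            heater_on = True
        elif temp > setpoint + band:
            heater_on = False
        # Act on the decision, then let the room leak heat to the outside.
        heat_in = heat_rate if heater_on else 0.0
        temp += heat_in - loss_rate * (temp - outside)
        history.append((round(temp, 2), heater_on))
    return history


if __name__ == "__main__":
    # Hypothetical numbers: a cold room (10 degrees) and a 20-degree setpoint.
    run = simulate_thermostat(start_temp=10.0, setpoint=20.0, band=0.5,
                              heat_rate=2.0, loss_rate=0.05, outside=0.0, steps=60)
    for temp, on in run[-6:]:
        print(f"{temp:6.2f}  {'coal on' if on else 'coal off'}")
```

With these made-up numbers the room settles into a sawtooth about a degree and a half either side of 20. A two-valued decision, repeated often enough, does all the regulating.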

(The bridge is bipolar!)

My takeaway from that is that Wiener is not giving up on his analogy between analog computers and human brains. "Cybernetics" is going to go down with the analog computing ship, clearing the way for many cycles of digital computer enthusiasts warning that "artificial intelligence is going to take your jobs."

With Shockley, Bardeen and Brattain also in the news this month, it's time for another cut at how that came to happen, and some social commentary on top.

The actual history of the transistor is well enough known, although somewhat masked by insistence on heroic legend. Julius Lilienfeld carried German work over to the United States in 1926, but made the mistake of doing his work at a lab owned by Magnavox that failed to make it through the Depression. Given how little public fuss he made about the subject, I imagine that Dr. Lilienfeld was well compensated by Bell for not getting in the way of its legend. The American Physical Society's account focuses on the corporate incentive to develop the transistor, and the "all-star team" that was assembled to create a semiconductor-based amplifier. Such an amplifier could boost telephone signals and potentially extend telephone networks. (Alaina Levine, writing for the APS, is apparently unaware that long distance was already a thing in 1948, or she might not say that "vacuum tubes were not very reliable, and they consumed too much power and produced too much heat to be practical for AT&T’s needs." It would be more accurate to say that something better was called for.)

In Levine's version, William Shockley is also almost absent from the Bell Labs setting while Bardeen and Brattain take the first, crucial steps. Shockley is their director, but makes only rare appearances at the lab in New Jersey where his lesser partners are working on turning his brainstorm into working hardware. It was, crucially, because Shockley took their work and dramatically improved on it during two weeks spent in Chicago, attending the convention of the APS, that he was reinserted into the story. (A year later, he published the general form of their nascent semiconductor theory.)

I am not pointing this out to take credit from Shockley. What I'm getting at is the strange absence of his war work. I get the vague impression that he might have been fired from the Antisubmarine Warfare Operations Research Group at Columbia University, since he is listed as doing naval operations research from 1942 until 1944, and then as an expert consultant to the office of the Secretary of War from 1944 to 1945, which sounds suspiciously as though it is not a real job. Random slander of the clearly-on-the-autism-spectrum dead aside, my point, such as I have one, is that Shockley was well acquainted with ASW work, and thus, presumably, with the quartz crystal transducers used in wartime sonar equipment. As John Moll's biographical memoir makes clear, wartime improvements in silicon and germanium point contact detectors made new experiments practical. I should mention here that Moll specifies point contact detectors because this was the specific application that the Shockley group was working on. Elsewhere he notes rectifiers, which I wanted to point out as evidence that I'm not entirely crazy!

So there's an absent presence here: the state. This is also true of Norbert Wiener, another of science's square pegs in round holes, who has somehow been extruded from MIT's effort to create an antiaircraft fire control system capable of accepting "lock on" from a radar. As I try to make heads or tails of early postwar American naval building, it seems as though the resulting Mark 56 Gun Fire Control System first went to sea on the Mitschers of 1949, although this must be wrong, because the tradition is that the '56 was ready in time to fight kamikazes. Anyway, official credit for the Mark 56 goes to Ivan Getting and Antonin Svoboda, and Svoboda returned to Prague in 1946. Getting's career gets lost in a haze of impressive CV line entries in the late Forties, but I wouldn't be completely surprised if he was at the door to show Wiener the way out in '47. As far as I can tell, Wiener then spent the rest of his life gassing about neurons being analog computers and spending the royalties from Cybernetics.

So we have here two developments in science that we are aching to connect, but which just do not seem to be coming together yet. Shockley, Bardeen and Brattain are not working on computing. Their semiconductor amplifier isn't a proof of concept, as we understand it looking back. It was the whole point of their research. A better amplifier was that important. The fact that it was a species of logic gate, and that it would form the basis of printed logic circuits, doesn't even seem to have become obvious until people began to approach the problem as one of replacing vacuum tubes with a superior technology in actually existing digital computers.
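The "species of logic gate" point can be made concrete. Here is a minimal sketch that treats each transistor as an idealised on/off switch, which deliberately ignores everything analog about a real device (the very thing Bell cared about). It wires two switches into a NAND gate and builds the other gates, and a one-bit adder, from NAND alone. The helper names (`switch`, `nand`, `half_adder` and so on) are hypothetical.

```python
from typing import Tuple


def switch(control: bool) -> bool:
    """Idealised transistor: conducts (True) when its control input is high."""
    return control


def nand(a: bool, b: bool) -> bool:
    # Two switches in series between the output and ground: the output is
    # pulled low only when both conduct; otherwise a load resistor holds it high.
    pulled_low = switch(a) and switch(b)
    return not pulled_low


# Every other gate can be wired up from NAND alone.
def not_(a: bool) -> bool:
    return nand(a, a)


def and_(a: bool, b: bool) -> bool:
    return not_(nand(a, b))


def or_(a: bool, b: bool) -> bool:
    return nand(not_(a), not_(b))


def xor(a: bool, b: bool) -> bool:
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))


def half_adder(a: bool, b: bool) -> Tuple[bool, bool]:
    """Add two one-bit numbers: returns (sum_bit, carry_bit)."""
    return xor(a, b), and_(a, b)


if __name__ == "__main__":
    for a in (False, True):
        for b in (False, True):
            s, c = half_adder(a, b)
            print(int(a), "+", int(b), "= carry", int(c), "sum", int(s))
```

Nothing here needs a better amplifier, only a reliable switch, which is arguably why the connection was easy to miss in 1948.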

Interestingly, there's another group of scientists who seem to be much closer to the problem.

If you're like me, you grew up reading that "explosive lenses" are fundamental to the design of atom bombs, starting with Nagasaki's Fat Man. You were also told that the math of it all is a great deal more complicated than any other part of atom bomb making. And, yes, John von Neumann was involved. The basic concept is that you wrap the core of the bomb with explosives that all implode inward on detonation to compress it together with perfect evenness. Since these pieces of explosive cheese aren't obviously "lenses" in the optical sense (my glasses aren't exploding right now), you would conclude, knowing physicists, that there is some kind of physical analogy underlying the terminology.

Which, of course, there is. Light, or a wave of hot, expanding gas, is conveniently described by the wave equation, which I am just going to lift from Wikipedia like the laziest bastard who ever blogged:

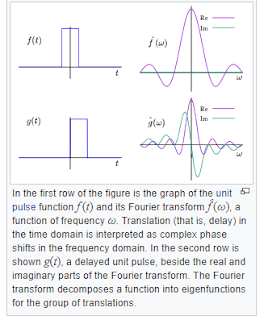

This is the time-independent version, the Helmholtz equation, although if good old Hermann would recognise it in this form, I'm a monkey's uncle. You don't have to do any of this goofiness in analysing what happens when a regular light wave hits the centre of an optical lens. Simple ray tracing will do just fine. Unfortunately, that won't serve when you get close to the edge of the lens, and this is where old-time glasses will start to show annoying fringes, while would-be atomic devices show backsplashing, neutron-spitting, white-hot plutonium. (Which is not as much fun as it sounds.) The way to solve this is, well, to vary the thickness of the glass (or explosives) away from the simple geometric curve that serves in the centre. So get out a knife, and shave some stuff off, because, look, we're talking about physicists here. You want "subtle," go talk to a wizard or something. The trick is to figure out just how much to shave off to maintain the same solution at the edge of the lens as at the centre. If only there were a way to turn a wave into a discrete pulse going in a specific direction, the way that a lens might be said to "decompose" a complex signal into a series of discrete pulses, adjust their direction, and recompose them into a new wave.
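The "how much to shave off" question has a tidy answer in the ray picture: the classic equal-travel-time condition, under which every path from source to target takes the same time. Here is a minimal sketch of that bookkeeping. It assumes a point source, a flat target plane, straight-line paths with no refraction at the boundary between materials, and made-up "fast" and "slow" speeds that are not real explosive or glass data. The function names are hypothetical.

```python
import math


def slow_layer_thickness(x, depth, radius, v_fast, v_slow):
    """Length of slow material a straight path to offset x must cross so that
    its pulse reaches the target plane at the same moment as the all-fast
    path to the rim (x = radius). Expects |x| <= radius.

    Simplifications (assumptions, not real lens design): a point source at
    the origin, a flat target plane at distance `depth`, straight-line paths
    with no refraction at the fast/slow boundary.
    """
    if not 0 < v_slow < v_fast:
        raise ValueError("need 0 < v_slow < v_fast")
    if abs(x) > radius:
        raise ValueError("offset lies outside the lens")
    path = math.hypot(depth, x)                  # straight-line path length
    t_rim = math.hypot(depth, radius) / v_fast   # longest path, all fast material
    # Solve (path - s) / v_fast + s / v_slow == t_rim for s.
    s = (t_rim - path / v_fast) / (1.0 / v_slow - 1.0 / v_fast)
    if s > path:
        raise ValueError("geometry needs more slow material than the path holds")
    return s


def arrival_time(x, depth, radius, v_fast, v_slow):
    """Travel time along the path to offset x with the computed slow layer."""
    s = slow_layer_thickness(x, depth, radius, v_fast, v_slow)
    path = math.hypot(depth, x)
    return (path - s) / v_fast + s / v_slow


if __name__ == "__main__":
    # Illustrative numbers only (arbitrary units), not explosive or glass data.
    for x in (0.0, 1.5, 3.0, 4.5, 6.0):
        s = slow_layer_thickness(x, depth=10.0, radius=6.0, v_fast=8.0, v_slow=5.0)
        t = arrival_time(x, depth=10.0, radius=6.0, v_fast=8.0, v_slow=5.0)
        print(f"offset {x:3.1f}: slow layer {s:5.3f}, arrival {t:.6f}")
```

The slow layer comes out thickest on the axis and tapers to nothing at the rim. The short central path is held back until it ties with the long outer ones. Real lenses also have to account for the bending at the boundary that this sketch ignores.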

Which there is. Here, let me steal from Wikipedia again, since there is no way I am relearning this math after thirty-odd years, and no way that you're going to sit still to read it if I did, or even could:

The Fourier transform is the purely mathematical version of what a lens does. And it is inherently digital.
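The "decompose, adjust, recompose" idea maps onto the discrete Fourier transform, the sampled form of the transform that a digital machine can actually evaluate. Here is a minimal sketch in plain Python. It uses a naive O(N²) DFT rather than any FFT library and a made-up two-tone signal. The names `dft` and `idft`, and the choice of which tone to drop, are illustrative only.

```python
import cmath
import math


def dft(samples):
    """Naive discrete Fourier transform: X[k] = sum_n x[n] * exp(-2*pi*i*k*n/N)."""
    size = len(samples)
    return [
        sum(x * cmath.exp(-2j * math.pi * k * n / size) for n, x in enumerate(samples))
        for k in range(size)
    ]


def idft(spectrum):
    """Inverse DFT: x[n] = (1/N) * sum_k X[k] * exp(+2*pi*i*k*n/N)."""
    size = len(spectrum)
    return [
        sum(X * cmath.exp(2j * math.pi * k * n / size) for k, X in enumerate(spectrum)) / size
        for n in range(size)
    ]


# A made-up "complex signal": two tones, 3 and 7 cycles per window of N samples.
N = 32
signal = [math.sin(2 * math.pi * 3 * n / N) + 0.5 * math.sin(2 * math.pi * 7 * n / N)
          for n in range(N)]

# Decompose: the energy lands in a few discrete bins (each tone at k and N - k).
spectrum = dft(signal)
peaks = [k for k, X in enumerate(spectrum) if abs(X) > 1e-6]
print("bins with energy:", peaks)  # → [3, 7, 25, 29]

# Adjust: drop the 7-cycle tone by zeroing its two bins.
filtered = [0j if k in (7, N - 7) else X for k, X in enumerate(spectrum)]

# Recompose: what comes back is the pure 3-cycle tone (imaginary parts are round-off).
recomposed = [z.real for z in idft(filtered)]
```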

My last technological appendix was on about this, too, discussing the lens in the Xerox 914 that makes it possible for an office machine to reproduce a legal-sized document without the text curving up to meet the margins. I doubt that the work at Xerox has much to do with the national security sector's advance towards digital computing, but I strongly suspect that spy camera work does. Cold War secrecy makes it a bit hard to make out what comes after the RAF's F52 camera, which, as far as I can tell, was still the main system for PR Mosquitoes as of Christmas 1948. Following up on that took me to some grognards trying to sort out a war-surplus "F60," which seems to have been used to take photographic records of H2S displays:

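*[Photo: C. Colin Carron, 2009, assuming that a Crown copyright has expired.] That's a camera mounted on an H2S CRT with a copy stand, by the way. It's to figure out where the bombs are being dropped.*

The F60 was a conventional, although very deluxe, Kodak camera. So this little excursion doesn't fit into my theory about modern optics nudging 1948 towards the digital world, but it is certainly worth noting.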

At this point I should probably end with the social commentary promised. Exactly why is Wiener dwelling on the idea that "cybernetics" threatens middle-class jobs, and why is Time so willing to press the point? I'm going to propose that it is intimations of the 1949 recession. Whether intentionally or not, this kind of talk is great for depressing wage demands.

Thanks, Obama.
